Most program managers assess the value and impact of their work all the time when they ask questions, consult partners, make assessments, and obtain feedback. They then use the information collected to improve the program. Indeed, such informal assessments fit nicely into a broad definition of evaluation as the “examination of the worth, merit, or significance of an object.” [4] Throughout this manual, the term “program” is defined as “any set of organized activities supported by a set of resources to achieve a specific and intended result.” This definition is intentionally broad so that almost any organized public health action can be seen as a candidate for program evaluation:

What distinguishes program evaluation from ongoing informal assessment is that program evaluation is conducted according to a set of guidelines. With that in mind, this manual defines program evaluation as “the systematic collection of information about the activities, characteristics, and outcomes of programs to make judgments about the program, improve program effectiveness, and/or inform decisions about future program development.” [5] Program evaluation does not occur in a vacuum; rather, it is influenced by real-world constraints. Evaluation should be practical and feasible and conducted within the confines of resources, time, and political context. Moreover, it should serve a useful purpose, be conducted in an ethical manner, and produce accurate findings. Evaluation findings should be used both to make decisions about program implementation and to improve program effectiveness.

Many different questions can be part of a program evaluation, depending on how long the program has been in existence, who is asking the question, and why the information is needed.

In general, evaluation questions fall into these groups:

All of these are appropriate evaluation questions and might be asked with the intention of documenting program progress, demonstrating accountability to funders and policymakers, or identifying ways to make the program better.

Evaluation Supplements Other Types of Reflection and Data Collection

Planning asks, “What are we doing and what should we do to achieve our goals?” By providing information on progress toward organizational goals and identifying which parts of the program are working well and/or poorly, program evaluation sets up the discussion of what can be changed to help the program better meet its intended goals and objectives.

Performance Measurement

Increasingly, public health programs are accountable to funders, legislators, and the general public. Many programs meet this demand by creating, monitoring, and reporting results for a small set of markers and milestones of program progress. Such “performance measures” are a type of evaluation, answering the question “How are we doing?” More importantly, when performance measures show significant or sudden changes in program performance, program evaluation efforts can be directed to the troubled areas to determine “Why are we doing poorly or well?”

Linking program performance to program budget is the final step in accountability. Called “activity-based budgeting” or “performance budgeting,” it requires an understanding of program components and the links between activities and intended outcomes. The early steps in the program evaluation approach (such as logic modeling) clarify these relationships, making the link between budget and performance easier and more apparent.

Surveillance and Program Evaluation

While the terms surveillance and evaluation are often used interchangeably, each makes a distinctive contribution to a program, and it is important to clarify their different purposes. Surveillance is the continuous monitoring or routine collection of data on various factors (e.g., behaviors, attitudes, deaths) at regular intervals over time. Surveillance systems have existing resources and infrastructure. Data gathered by surveillance systems are invaluable for performance measurement and program evaluation, especially of longer term and population-based outcomes. In addition, these data serve an important function in program planning and “formative” evaluation by identifying key burden and risk factors: the descriptive and analytic epidemiology of the public health problem. There are limits, however, to how useful surveillance data can be for evaluators. For example, some surveillance systems such as the Behavioral Risk Factor Surveillance System (BRFSS), Youth Tobacco Survey (YTS), and Youth Risk Behavior Survey (YRBS) can measure changes in large populations but have insufficient sample sizes to detect changes in outcomes for more targeted programs or interventions. Also, these surveillance systems may have limited flexibility to add questions for a particular program evaluation.
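To see why a large population survey can miss changes in a targeted program's population, consider the smallest change in a prevalence estimate that two survey waves could reliably detect. The sketch below is illustrative only: it assumes simple random samples of equal size in each wave, the same baseline prevalence `p` in both waves, a two-sided 5% significance level, and 80% power. It ignores the weighting and design effects of real surveys such as BRFSS, which would make the detectable change larger still. The function name and the sample sizes are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def min_detectable_difference(p: float, n: int, alpha: float = 0.05, power: float = 0.80) -> float:
    """Approximate smallest change in a proportion detectable between two
    independent survey waves of n respondents each (hypothetical helper).

    Normal approximation for comparing two proportions, assuming baseline
    prevalence p in both waves and simple random sampling (no design effect).
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value (about 1.96)
    z_beta = z.inv_cdf(power)           # quantile for the desired power (about 0.84)
    standard_error = sqrt(2 * p * (1 - p) / n)
    return (z_alpha + z_beta) * standard_error


if __name__ == "__main__":
    baseline = 0.20  # hypothetical 20% prevalence of a behavior
    for label, n in [("statewide sample", 4000), ("program's target group", 300)]:
        mdd = min_detectable_difference(baseline, n)
        print(f"{label} (n={n} per wave): about {mdd * 100:.1f} percentage points")
```

With these assumed numbers, a statewide sample of 4,000 per wave can detect a change of roughly 2.5 percentage points, while a subsample of 300 would need a change of roughly 9 points before it registered. This is one way to see why evaluations of targeted programs often need their own data collection.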

In the best of all worlds, surveillance and evaluation are companion processes that can be conducted simultaneously. Evaluation may supplement surveillance data by providing tailored information to answer specific questions about a program. Data collected to answer evaluation-specific questions are more flexible than surveillance data and may allow program areas to be assessed in greater depth. For example, a state may supplement surveillance information with detailed surveys to evaluate how well a program was implemented and the impact of a program on participants’ knowledge, attitudes, and behavior. Evaluators can also use qualitative methods (e.g., focus groups, semi-structured or open-ended interviews) to gain insight into the strengths and weaknesses of a particular program activity.

Research and Program Evaluation

Both research and program evaluation make important contributions to the body of knowledge, but fundamental differences in the purpose of research and the purpose of evaluation mean that good program evaluation need not always follow an academic research model. Some of these differences have begun to break down as research moves toward increasingly participatory models [6] and as some evaluations aspire to make statements about attribution. Even so, “pure” research and evaluation serve somewhat different purposes (see the “Distinguishing Principles of Research and Evaluation” table), nicely summarized in the adage “Research seeks to prove; evaluation seeks to improve.” Academic research focuses primarily on testing hypotheses; a key purpose of program evaluation is to improve practice. Research is generally thought of as requiring a controlled environment or control groups. In field settings directed at prevention and control of a public health problem, this is seldom realistic. Of the ten concepts contrasted in the table, the last three (judgments, conclusions, and uses) are especially worth noting. Unlike pure academic research models, program evaluation acknowledges and incorporates differences in values and perspectives from the start, may address many questions besides attribution, and tends to produce results for varied audiences.

Distinguishing Principles of Research and Evaluation

| Concept | Research Principles | Program Evaluation Principles |
|---|---|---|
| | Scientific method | Framework for program evaluation |
| Decision Making | Investigator-controlled | Stakeholder-controlled |
| Standards | Validity; Repeatability | Program evaluation standards |
| Questions | Facts | Values |
| Design | Isolate changes and control circumstances | Incorporate changes and account for circumstances |
| Data Collection | Sources; Indicators/Measures | Sources; Indicators/Measures |
| Analysis & Synthesis | Timing; Scope | Timing; Scope |
| Judgments | Implicit | Explicit |
| Conclusions | Attribution | Attribution and contribution |
| Uses | Disseminate to interested audiences | Feedback to stakeholders; Disseminate to interested audiences |

Program staff may be pushed to do evaluation by external mandates from funders, authorizers, or others, or they may be pulled to do evaluation by an internal need to determine how the program is performing and what can be improved. While push or pull can motivate a program to conduct good evaluations, program evaluation efforts are more likely to be sustained when staff see the results as useful information that can help them do their jobs better.

Data gathered during evaluation enable managers and staff to create the best possible programs, to learn from mistakes, to make modifications as needed, to monitor progress toward program goals, and to judge the success of the program in achieving its short-term, intermediate, and long-term outcomes. Most public health programs aim to change behavior in one or more target groups and to create an environment that reinforces sustained adoption of these changes, with the intention that changes in environments and behaviors will prevent and control diseases and injuries. Through evaluation, you can track these changes and, with careful evaluation designs, assess the effectiveness and impact of a particular program, intervention, or strategy in producing these changes.

Recognizing the importance of evaluation in public health practice and the need for appropriate methods, the World Health Organization (WHO) established the Working Group on Health Promotion Evaluation. The Working Group prepared a set of conclusions and related recommendations to guide policymakers and practitioners. [7] Recommendations immediately relevant to the evaluation of comprehensive public health programs include:

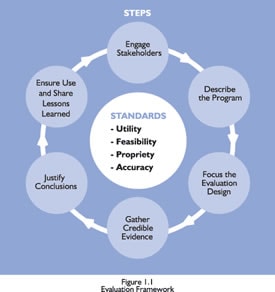

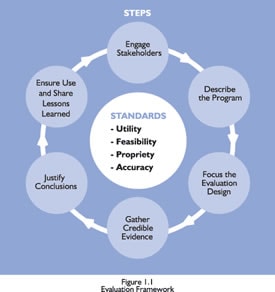

Program evaluation is one of ten essential public health services [8] and a critical organizational practice in public health. [9] Until recently, however, there had been little agreement among public health officials on the principles and procedures for conducting such studies. In 1999, CDC published Framework for Program Evaluation in Public Health and some related recommendations. [10] The Framework, as depicted in Figure 1.1, defined six steps and four sets of standards for conducting good evaluations of public health programs.

The underlying logic of the Evaluation Framework is that good evaluation does not merely gather accurate evidence and draw valid conclusions but also produces results that are used to make a difference. To maximize the chances that evaluation results will be used, you need to create a “market” before you create the “product” (the evaluation). You determine the market by focusing evaluations on the questions that are most salient, relevant, and important. You ensure the best evaluation focus by understanding where the questions fit into the full landscape of your program description and, especially, by ensuring that you have identified and engaged stakeholders who care about these questions and want to take action on the results.

The steps in the CDC Framework are informed by a set of standards for evaluation. [11] These standards do not constitute a way to do evaluation; rather, they serve to guide your choice from among the many options available at each step in the Framework. The 30 standards cluster into four groups:

Utility: Who needs the evaluation results? Will the evaluation provide relevant information in a timely manner for them?

Feasibility: Are the planned evaluation activities realistic given the time, resources, and expertise at hand?

Propriety: Does the evaluation protect the rights of individuals and protect the welfare of those involved? Does it engage those most directly affected by the program and changes in the program, such as participants or the surrounding community?

Accuracy: Will the evaluation produce findings that are valid and reliable, given the needs of those who will use the results?

Sometimes the standards broaden your exploration of choices. Often, they help reduce the options at each step to a manageable number. For example, in the step “Engaging Stakeholders,” the standards can help you think broadly about who constitutes a stakeholder for your program, but simultaneously can reduce the potential list to a manageable number by posing the following questions: (Utility) Who will use these results? (Feasibility) How much time and effort can be devoted to stakeholder engagement? (Propriety) To be ethical, which stakeholders need to be consulted, those served by the program or the community in which it operates? (Accuracy) How broadly do you need to engage stakeholders to paint an accurate picture of this program?

Similarly, there are unlimited ways to gather credible evidence (Step 4). Asking these same kinds of questions as you approach evidence gathering will help you identify the approaches that will be most useful, feasible, proper, and accurate for this evaluation at this time. Thus, the CDC Framework approach supports the fundamental insight that there is no single “right” program evaluation. Rather, over the life of a program, any number of evaluations may be appropriate, depending on the situation.

How to Establish an Evaluation Team and Select a Lead Evaluator

Good evaluation requires a combination of skills that are rarely found in one person. The preferred approach is to choose an evaluation team that includes internal program staff, external stakeholders, and possibly consultants or contractors with evaluation expertise.

An initial step in the formation of a team is to decide who will be responsible for planning and implementing evaluation activities. One program staff person should be selected as the lead evaluator to coordinate program efforts. This person should be responsible for evaluation activities, including planning and budgeting for evaluation, developing program objectives, addressing data collection needs, reporting findings, and working with consultants. The lead evaluator is ultimately responsible for engaging stakeholders, consultants, and other collaborators who bring the skills and interests needed to plan and conduct the evaluation.

Although this staff person should have the skills necessary to competently coordinate evaluation activities, he or she can choose to look elsewhere for technical expertise to design and implement specific tasks. However, developing in-house evaluation expertise and capacity is a beneficial goal for most public health organizations. Among the characteristics of a good evaluator, the ability to work with a diverse group of stakeholders warrants highlighting. The lead evaluator should be willing and able to draw out and reconcile differences in values and standards among stakeholders and to work with knowledgeable stakeholder representatives in designing and conducting the evaluation.

Seek additional evaluation expertise in programs within the health department, through external partners (e.g., universities, organizations, companies), from peer programs in other states and localities, and through technical assistance offered by CDC. [12]

You can also use outside consultants as volunteers, advisory panel members, or contractors. External consultants can provide high levels of evaluation expertise from an objective point of view. Important factors to consider when selecting consultants are their level of professional training, experience, and ability to meet your needs. Overall, it is important to find a consultant whose approach to evaluation, background, and training best fit your program’s evaluation needs and goals. Be sure to check all references carefully before you enter into a contract with any consultant.

To generate discussion around evaluation planning and implementation, several states have formed evaluation advisory panels. Advisory panels typically generate input from local, regional, or national experts who would otherwise be difficult to access. Such an advisory panel can lend credibility to your efforts and prove useful in cultivating widespread support for evaluation activities.

Evaluation team members should clearly define their respective roles. For some teams, informal consensus may be enough; others prefer a written agreement that describes who will conduct the evaluation and assigns specific roles and responsibilities to individual team members. Either way, the team must clarify and reach consensus on the:

The agreement should also include a timeline and a budget for the evaluation.

Organization of This Manual

This manual is organized by the six steps of the CDC Framework. Each chapter introduces the key questions to be answered in that step, approaches to answering those questions, and how the four evaluation standards might influence your approach. The main points are illustrated with one or more public health examples that are composites inspired by actual work being done by CDC and states and localities. [13] Some examples that will be referred to throughout this manual:

Affordable Home Ownership Program

The program aims to provide affordable home ownership to low-income families by identifying and linking funders/sponsors, construction volunteers, and eligible families. Together, they build a house over a multi-week period. At the end of the construction period, the home is sold to the family using a no-interest loan.

Childhood Lead Poisoning Prevention (CLPP)

Lead poisoning is the most widespread environmental hazard facing young children, especially in older inner-city areas. Even at low levels, elevated blood lead levels (EBLL) have been associated with reduced intelligence, medical problems, and developmental problems. The main sources of lead poisoning in children are paint and dust in older homes with lead-based paint. Public health programs address the problem through a combination of primary and secondary prevention efforts. A typical secondary prevention program at the local level does outreach and screening of high-risk children, identifying those with EBLL, assessing their environments for sources of lead, and case managing both their medical treatment and environmental corrections. However, these programs must rely on others to accomplish the actual medical treatment and the reduction of lead in the home environment.

Provider Education in Immunization

A common initiative of state immunization programs is a comprehensive provider education program to train and motivate private providers to provide more immunizations. A typical program includes a newsletter, distributed three times per year, that updates private providers on new developments and changes in policy and provides brief education on various immunization topics; immunization trainings, held around the state and conducted by teams of state program staff and physician educators, on general immunization topics and the immunization registry; a Provider Tool Kit on how to increase immunization rates in providers’ practices; training of nursing staff in local health departments, who then conduct immunization presentations in individual private provider clinics; and presentations on immunization topics by physician peer educators at physician grand rounds and state conferences.

Each chapter also provides checklists and worksheets to help you apply the teaching points.

[4] Scriven M. Minimalist theory of evaluation: The least theory that practice requires. American Journal of Evaluation 1998;19:57-70.

[5] Patton MQ. Utilization-focused evaluation: The new century text. 3rd ed. Thousand Oaks, CA: Sage, 1997.

[6] Green LW, George MA, Daniel M, Frankish CJ, Herbert CP, Bowie WR, et al. Study of participatory research in health promotion: Review and recommendations for the development of participatory research in health promotion in Canada. Ottawa, Canada: Royal Society of Canada, 1995.

[7] WHO European Working Group on Health Promotion Evaluation. Health promotion evaluation: Recommendations to policy-makers: Report of the WHO European working group on health promotion evaluation. Copenhagen, Denmark: World Health Organization, Regional Office for Europe, 1998.

[8] Public Health Functions Steering Committee. Public health in America. Fall 1994. Available at . January 1, 2000.

[9] Dyal WW. Ten organizational practices of public health: A historical perspective. American Journal of Preventive Medicine 1995;11(6)Suppl 2:6-8.

[10] Centers for Disease Control and Prevention. op cit.

[11] Joint Committee on Standards for Educational Evaluation. The program evaluation standards: How to assess evaluations of educational programs. 2nd ed. Thousand Oaks, CA: Sage Publications, 1994.

[12] CDC’s Prevention Research Centers (PRC) program is an additional resource. The PRC program is a national network of 24 academic research centers committed to prevention research and the ability to translate that research into programs and policies. The centers work with state health departments and members of their communities to develop and evaluate state and local interventions that address the leading causes of death and disability in the nation. Additional information on the PRCs is available at www.cdc.gov/prc/index.htm.

[13] These cases are composites of multiple CDC and state and local efforts that have been simplified and modified to better illustrate teaching points. While inspired by real CDC and community programs, they are not intended to reflect the current